Who Really Pays for Tariffs? AI Might Finally Convince You

Future Work | A new study shows AI is better at changing minds than facts alone. Could it fix political misinformation?

Living through the Trump and Brexit years, I have seen firsthand how populist rhetoric and social media eroded meaningful debate. Misinformation surged, political discourse turned angry, and expertise lost ground to hot takes.

I saw this up close in my own family. A family member, let’s call him Ted, went right down the rabbit hole. It was probably my fault. I had attempted to simplify photo-sharing and suggested we shift from email to a modern platform. On my advice, Ted downloaded Facebook Messenger.

Ted had always believed in aliens, but social media turned this into an obsession. Push notifications plus Facebook groups fed his anxiety and mistrust. “Everyone I talk to believes me.” When COVID came, it was an “inside job.” Vaccines had chips in them. I started to receive less sympathy for the punishing hours I put into being a founder of an LGBTQ+ fintech company. When he said, “Isn’t it funny how hard it is for straight white people to get work these days?” I wondered if I had lost him altogether.

I was willing to let things slide, but I really wanted him, an elderly man, to get a vaccine. I tried to show him “the truth.” Expert opinions, medical journals: nothing convinced him. “This is what they want you to think.” Rational, data-led tools didn’t work.

That appears to have changed, based on a new 2025 white paper: Artificial Influence: Comparing the Effects of AI and Human Source Cues in Reducing Certainty in False Beliefs. It looks as though AI could have a critical role in managing misinformation at scale.

How Did We Get Here?

Like Ted, many of us are in algorithmically created, hermetically sealed “information bubbles.” Social media matches us to content designed to stimulate emotional and intellectual pleasure centers. The more we like, the smaller our world becomes. Within these echo chambers, misinformation thrives. Alternative voices become quieter. Rational voices aren’t just ignored; they become unwelcome.

For years, Ted had believed in aliens. After Facebook, his beliefs hardened. He didn’t just believe that aliens existed; he was certain. There was a secret world order (of lizard people), chaired by Queen Elizabeth (long live the queen). To Ted, there was an intricate world hidden beneath the surface.

I am many things—among them, an outspoken, often stubborn person who in my youth enjoyed being “correct” more than being “right.” This mix is the ideal “other” to reinforce populist thinking. I fell straight into the trap. My graphs, expert advice, data, news reports—nothing convinced Ted. They became symbols of my own cult of ideas.

How to change a conspiracy theorist’s mind

Conspiracies thrive on powerlessness and uncertainty. When we cannot explain complex world events, we grasp for answers. These ideas become entwined with identity, reinforced and policed by online communities.

Conspiracy belief is multi-pronged, so the response has to be too. By understanding the nature of a belief, we can develop matching strategies that provide a positive context in which to challenge the idea and that take both the message and the messenger into account. Experts are pretty good at this. The problem is that they’re not scalable: there aren’t enough experts to go around, but there’s plenty of AI these days…

Can AI Make This Better?

A fascinating study by Goel et al. (2025) tested whether AI, peers, or experts were most effective at reducing false beliefs. Participants, all holding at least one misinformation-based belief, were not aware of whom they were speaking with.

The results were striking. While people were most likely to believe experts, conversations with AI left participants less certain of their false beliefs. Around three in ten abandoned them altogether.

The novel approach, using LLMs, meant the conversation wasn’t confrontational. When I had tried to convince Ted with expert opinions, he felt judged. In the study, AI conversations allowed participants to examine and reconsider beliefs without feeling attacked. See the following example about a strongly held belief regarding Russian collusion in 2016.

Participants were even more receptive to AI than to peers. This means that the new frontier for combating misinformation and improving public discourse could be AI-enabled. Have we identified a potential antidote to misinformation-driven populism?

How could this expand to political misinformation (and help Ted)?

This reveals something crucial: if we want to help change populist beliefs, we must present contrary evidence in an environment where people feel safe questioning their own certainty.

When I bombarded Ted with facts, I strengthened his resistance. The AI approach, by contrast, offers patience, consistency, and no judgment.

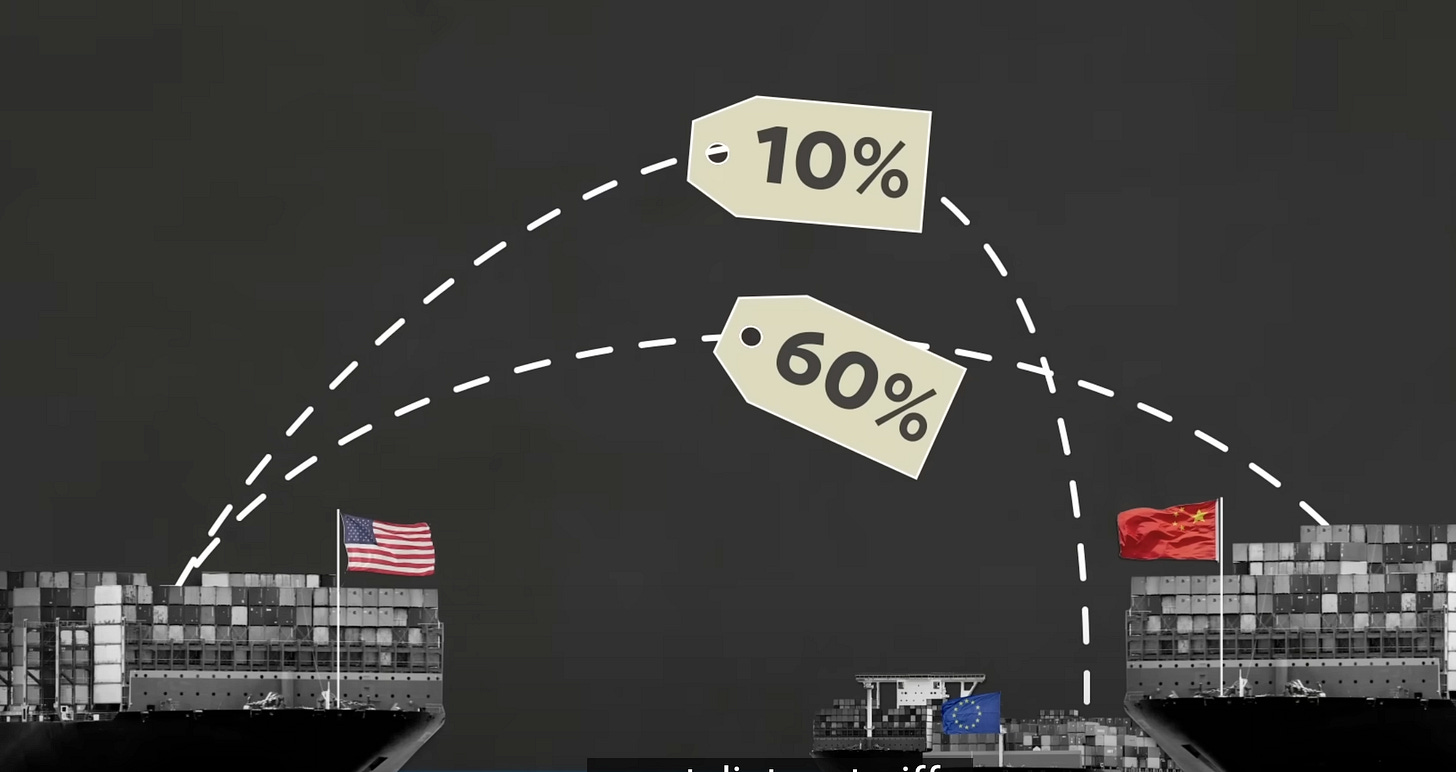

The study found that the more strongly a participant believed a piece of misinformation (for example, that foreign countries pay tariffs, not consumers), the more likely they were to reconsider after talking to an AI. That is the opposite of the effect of bombarding them with data alone.

Practical Applications and Future Potential

Imagine if Ted, instead of falling into Facebook’s rabbit hole, had access to an AI buddy that could chat with him about his beliefs—not to shut them down, but to explore them together, questioning gently, and showing alternative perspectives in a way that didn’t trigger defensiveness.

This is a step beyond the (largely unfashionable) fact-checking programs that Meta, X and others have deprioritized. Proactive AI-driven tools like chatbots could identify unhealthy beliefs early. By integrating with social media platforms, search engines, and messaging apps, we could offer real-time, gentle counter-narratives.

Unlike human moderators (poor things), AI doesn’t get tired, doesn’t argue emotionally, and would have access to the world’s knowledge to fight back. The challenge? These same AI tools could just as easily be used by bad actors to reinforce misinformation instead of combating it. We’d therefore need new guardrails to prevent AI from becoming another tool for political manipulation.

Conclusion

My experience with Ted taught me that combating misinformation isn’t just about presenting facts. We’re most effective when we help people question their own beliefs without feeling threatened. As the Goel study shows, we could possibly achieve this at scale with the current generation of LLMs.

The path forward isn’t about replacing humans but supplementing them with AI tools that are effective at fostering doubt, encouraging reflection, and providing a safe and reasonable counterpoint when people are most vulnerable to misinformation: probably alone, on their phones.

If social media fueled our misinformation crisis, AI, with the right guardrails, could help lead us out, one conversation at a time.