You Don’t Deserve My Data: The Delusion of AI Founders

Why early stage AI founders struggle to earn consumer data and how consumer psychology can help them build trust from day one.

Many AI founders think users will hand over their data because they believe in the mission. They won’t.

Your team and investors care about your mission. Your customers don’t. They care about what you give them today.

A fintech CEO told me her biggest challenge was getting a critical mass of users to connect their bank accounts via Plaid. Until the model had around a thousand users, it couldn’t generate distinctive value.

That sentence should make you uncomfortable.

Her vision was big, but her value exchange was vague. She was waiting for the model to discover what mattered most.

The founder looked tired and frustrated. “But our mission is so important—why won’t they give us their data?” she said.

Over the past few months, I’ve been coaching first-time founders building LLM products. I keep seeing the same pattern: no value without data, no data without customers, no customers without value. This is the quiet delusion of consumer AI founders right now.

Mission ≠ Value Exchange

Vision can motivate a team, but only value converts a customer.

I saw the same pattern earlier this year with a child-safety startup that I was advising as a fractional CMO. They wanted to diagnose and treat children’s mental health issues like anxiety, depression, and suicidal ideation by scanning the child’s phone and warning parents in time to take action.


They brought me in to help them acquire their first 1,000 customers. There was no budget for ads, no incentives, no referral mechanics. I knew if we were going to get their data, we’d need to give people a reason to care.

When I asked what we’d offer those customers, they said: “We can’t answer that right now. We just need a thousand users first, and then we’ll be able to figure out what’s possible.”

The founder was confused about why this wasn’t working. But this was a research proposal, not a go-to-market plan.

Here’s where being a visionary founder can get in the way. People don’t sign up for a new product that might eventually solve many problems. They sign up because it actually helps them solve one of theirs. Today.

The Prospecting Model (and Why It Doesn’t Work)

Here’s what both founders were actually doing: They were chasing datasets, not customers. They were using early adopters as unpaid R&D participants, assuming the model could mine enough context to tell them what to build next.

Think of it like panning for gold: wash through enough dirt and you’ll find some nuggets.

Investors hate this. CFOs hate this. It artificially inflates your customer acquisition cost (CAC) and wastes capital acquiring customers you either can’t help or who don’t care enough about how you can help them.
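To make the CAC point concrete, here is a toy calculation. The budget, the user count, and the 20% share of users the product can actually help are hypothetical numbers chosen for illustration. "Effective CAC" here just means spend divided by only the customers who get real value; it is a framing for this example, not a standard reporting metric.

```python
"""Toy illustration of how acquiring users you can't help inflates CAC.

All numbers are hypothetical; they are not from any company in this article.
"""


def blended_cac(spend: float, customers_acquired: int) -> float:
    """CAC as usually reported: total spend divided by all acquired customers."""
    return spend / customers_acquired


def effective_cac(spend: float, customers_acquired: int, share_helped: float) -> float:
    """Spend divided by only the customers the product can actually help.

    `share_helped` is a hypothetical estimate (between 0 and 1) of the fraction
    of acquired users who get real value and stick around.
    """
    if not 0 < share_helped <= 1:
        raise ValueError("share_helped must be in (0, 1]")
    return spend / (customers_acquired * share_helped)


if __name__ == "__main__":
    spend = 50_000.0  # hypothetical acquisition budget
    acquired = 1_000  # the "first thousand users"
    print(f"Blended CAC:   ${blended_cac(spend, acquired):,.2f}")          # $50.00
    print(f"Effective CAC: ${effective_cac(spend, acquired, 0.2):,.2f}")  # $250.00
```

With these made-up numbers the reported CAC is $50, but each customer you can actually help costs $250. The gap grows as the share of users you can help shrinks.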

If you want users, you need a value proposition: a job-to-be-done specific enough that a real person would trade time, money, effort, or data to get it.

Designing a Value Loop That Works

Customers will do surprising things if they get value out of it.

The exchange doesn’t have to be perfect, but it has to feel fair. That fairness depends on balancing privacy (loss of control), friction (how hard something is), utility (how useful it feels), and novelty (how interesting it feels).

Fairness = (utility + novelty) - (loss of privacy + friction)

When that balance feels fair, people stay. When it doesn’t, the loop breaks.
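A minimal sketch of the fairness check, scored as benefits minus costs so that a positive number means the trade favors the user. The article gives no units, so the 0-10 scale, the example scores, and treating break-even as "fair" are all assumptions for illustration.

```python
"""A minimal fairness check, assuming every factor is scored 0-10 (hypothetical)."""
from dataclasses import dataclass


@dataclass
class ValueExchange:
    privacy_loss: float  # how much control the user gives up (0-10)
    friction: float      # how hard it is to hand over the data (0-10)
    utility: float       # how useful the return feels (0-10)
    novelty: float       # how interesting the return feels (0-10)

    def fairness(self) -> float:
        """Benefits minus costs: (utility + novelty) - (privacy loss + friction)."""
        return (self.utility + self.novelty) - (self.privacy_loss + self.friction)

    def feels_fair(self) -> bool:
        # Assumption: break-even or better counts as fair.
        return self.fairness() >= 0


# "Connect your bank so we can figure out what's possible": big ask, vague return.
vague_ask = ValueExchange(privacy_loss=8, friction=6, utility=2, novelty=3)
# Same ask, but the user gets an immediate, concrete insight back.
with_bridge = ValueExchange(privacy_loss=8, friction=6, utility=8, novelty=7)

print(vague_ask.fairness(), vague_ask.feels_fair())      # -9 False
print(with_bridge.fairness(), with_bridge.feels_fair())  # 1 True
```

Note that the ask itself (privacy loss and friction) is identical in both cases; only the return changes, and that alone flips the verdict.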

To help founders design for fairness, I use a simple four-step tool called the Trust-Value Framework. It helps teams unlock data and build trust at the same time:

Step 1: Map data value and friction. What data do you actually need to drive your model refinements and create user value? How much effort is required to provide it to you?

Step 2: Map novelty and utility. What will the user get instantly in return (insight, feature, emotion, or status)? Could they get this from elsewhere?

Step 3: Test the Fairness Gap. If you subtract friction and privacy loss from novelty and utility, does the trade still feel fair? If not, the loop won’t hold.

Step 4: Design the Bridge. What’s the smallest, most tangible hook that lets users experience value before they trust you?
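The four steps can be sketched as a small worksheet: record what each candidate bridge asks for (Step 1) and gives back (Step 2), compute its fairness gap (Step 3), then pick the smallest ask that still clears the gap (Step 4). The field names, 0-10 scores, tie-breaking rule, and candidate bridges (other than the diversification score mentioned later in this article) are hypothetical, not part of the framework as described.

```python
"""Hypothetical Trust-Value worksheet: score candidate bridges, pick one."""
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Bridge:
    name: str
    data_needed: tuple[str, ...]  # Step 1: data you must ask for
    friction: int                 # Step 1: effort to provide it (0-10)
    privacy_loss: int             # Step 1: control the user gives up (0-10)
    utility: int                  # Step 2: usefulness of the return (0-10)
    novelty: int                  # Step 2: interest or delight of the return (0-10)

    @property
    def fairness_gap(self) -> int:
        # Step 3: benefits minus costs; negative means the loop won't hold.
        return (self.utility + self.novelty) - (self.friction + self.privacy_loss)


def pick_bridge(candidates: list[Bridge]) -> Bridge | None:
    """Step 4: among fair bridges, prefer the smallest ask, then the biggest gap."""
    fair = [b for b in candidates if b.fairness_gap >= 0]
    if not fair:
        return None
    return min(fair, key=lambda b: (len(b.data_needed), -b.fairness_gap))


candidates = [
    Bridge("Full financial coach", ("all accounts", "goals", "income"),
           friction=8, privacy_loss=9, utility=7, novelty=5),
    Bridge("Diversification score", ("one linked account",),
           friction=4, privacy_loss=5, utility=6, novelty=6),
    Bridge("Budget tracker", ("checking account", "credit card"),
           friction=5, privacy_loss=6, utility=7, novelty=4),
]
choice = pick_bridge(candidates)
print(choice.name if choice else "No fair bridge yet")  # Diversification score
```

Ranking by data requested first reflects the "smallest, most tangible hook" in Step 4; a team could just as reasonably rank by fairness gap first.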

Get these four steps right and you trigger the loop that powers every successful consumer AI product: Data → Value → Trust → More Data → Better Value

How this works in practice

Applying the Trust-Value Framework in a coaching session pushed a fintech CEO toward a small, tangible bridge: a portfolio diversification score.

Utility: Helps target users see concentration risk.

Novelty: Fun, light, even a little gimmicky.

Fairness: Offsets the friction of connecting a bank account.

The score was simple to calculate, didn’t require a large dataset to train, and delivered immediate, actionable insight.
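The article doesn’t say how the fintech computed its score. One common, easy-to-explain option that needs no training data is the Herfindahl-Hirschman Index (HHI), the sum of squared portfolio weights. The sketch below assumes HHI and maps it to a hypothetical 0-100 score; the holdings and the scaling are illustrative.

```python
"""One possible diversification score (an assumption, not the fintech's method).

HHI = sum of squared portfolio weights: 1.0 for a single holding, 1/n for n
equal holdings. Lower HHI means less concentration risk.
"""


def diversification_score(holdings: dict[str, float]) -> dict[str, float]:
    """Return HHI, effective number of holdings, a 0-100 score, and top weight.

    `holdings` maps an asset name to its market value.
    """
    total = sum(holdings.values())
    if total <= 0 or any(v < 0 for v in holdings.values()):
        raise ValueError("holdings must be non-negative and sum to more than 0")
    weights = [v / total for v in holdings.values()]
    hhi = sum(w * w for w in weights)
    return {
        "hhi": hhi,
        "effective_holdings": 1 / hhi,    # how many equal positions this acts like
        "score": round(100 * (1 - hhi)),  # 0 for one position, rises as risk spreads
        "largest_weight": max(weights),   # the concentration users should notice
    }


portfolio = {"ACME": 70_000, "Index fund": 20_000, "Cash": 10_000}
print(diversification_score(portfolio)["score"])  # 46
```

A portfolio that is 70% one stock scores 46 and behaves like fewer than two equal positions, which is exactly the kind of instant, slightly surprising insight that can offset the friction of connecting an account.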

Once users saw their diversification score, they stayed. Once they stayed, they generated more data. More data improved the model, and the value compounded.

The real lesson: Earning trust requires designing one fair, specific exchange that proves your product’s intent from the first interaction.

This Isn’t a New Problem

Here’s the thing: this isn’t some novel challenge that LLMs created. Founders have been making this mistake for decades.

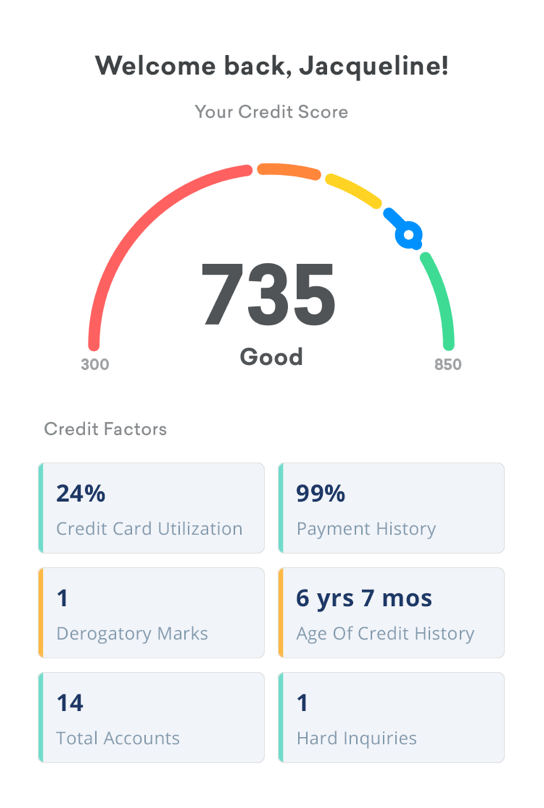

Credit Karma figured this out in 2007. They needed your financial data to make money (selling you tailored credit card and loan offers). But they didn’t lead with “connect all your accounts so we can figure out what to sell you.”

They led with a free credit score.

Once you saw your score, you stuck around. Once you stuck around, you’d connect more accounts. Once they had more data, they could recommend better products. Once the recommendations were good, you’d actually consider them.

Credit Karma didn’t ask for trust. They earned it with one clear, valuable exchange — a free credit score.

They didn’t ask you to hand over everything and hope the algorithm would tell them what to build. They picked one narrow, valuable thing (your score), gave it away for free, and built from there.

The company sold to Intuit for $7 billion. The pattern works.

LLMs Don’t Exempt You From Product Discipline

A big vision is great, but only if it backs into a clear roadmap that can be prioritized down to the feature level. This is, and has always been, a key product discipline.

You still need to define which problem you’re solving, for whom, and why now. The LLM can accelerate your learning, but it can’t replace it.


The founders who succeed are using AI to move faster toward customers they already understand and pain points that are specific and well defined. They aren’t spending money acquiring users they aren’t sure they can help.

If you’re wrestling with connect rates, stalled onboarding, or day‑30 drop‑off, it’s not a data problem. It’s a trust–value problem. I run 4–6 week Trust‑Value sprints to design one fair first exchange, ship three micro‑experiments in week one, and lift your key metric without gimmicks or giveaways. I’ve done this in fintech and safety products; the pattern holds. I’m opening two December sprint slots. Reply TRUST or email mr.rob.curtis@gmail.com and I’ll send a 30‑minute scoping link. Let’s earn the data by delivering value first.
